DOTENV_PATH = './.env'Part 1. Create the service and upload the pictures

Introduction

In this series of posts we are going to follow along the process and code required to train an object detection model using Azure Custom Vision (in its free tier).

We are going to use real world pictures compiled from work I have done over the years in Venezuela. In this kind of supervised learning problem we need tagged images. So we will use Smart Labeler to do that.

After the model is published in Azure service, we can use the API to build and share a demo with Gradio and Huggingface.

Here is the one that is already published for you to try:

Telecom-Object-Detection

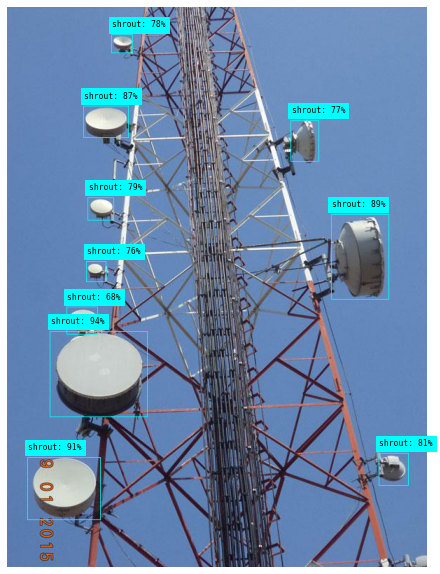

The model will be trained to detect the following objects:

- Grid Antenna

- Panel antenna

- Radome antenna

- RRU

- Shroud antenna

- Solid antenna

|

|

|

| Grid | Panel | Radome |

|

|

|

| RRU | Shroud | Solid |

Tutorial Parts

- Part 1:

- Creating a free Azure Custom Vision Service.

- Uploading the images to the service.

- Part 2 will cover:

- Analyzing what happens to the images after uploading.

- How to label the images using Smart Labeler

- Training and testing the model.

- Part 3 will cover:

- Create a Huggingface Gradio Demo.

References

- Microsoft Learn Excersice: Detect Objects in Images with Custom Vision

- Custom Vision Documentation: Quickstart: Create an object detection project with the Custom Vision client library

- REST API Endpoint: Custom Vision REST API reference - Azure Cognitive Services

- APIs Documentation: Custom_Vision_Training_3.3

- Azure SDK for Python: Custom Vision Client Library

- Source Code: Azure/azure-sdk-for-python

Part 1.1. Create a Custom Vision Service

I’m not going to get into the details of creating the service. And the reason is that there is a detailed tutorial covering not just that, but also the code for uploading and training a simple model. I encourage you to try it first:

Detect Objects in Images with Custom Vision

For this tutorial I created a Custom Vision with the following settings:

- Custom Vision service:

- Resource: ai102cvision

- Resource Kind: Custom Vision Training

- Project:

- Name: Telecom Equipment Detection

- Description: Detect different types of antennas

- Resource: ai102cvision [F0]

- Project Types: Object Detection

- Domains: General

Part 1.2. Upload the images

Environment variables

Update the configuration variables in the .env file that contains:

TrainingEndpoint=YOUR_TRAINING_ENDPOINT

TrainingKey=YOUR_TRAINING_KEY

ProjectID=YOUR_PROJECT_IDIn order to protect my credentials, I’m going to store .env file in a creds folder that isn’t being pushed to github.

Install and import libraries

We need to install Custom Vision’s Python SDK and python-dotenv:

! pip install azure-cognitiveservices-vision-customvision==3.1.0

! pip install python-dotenv

from azure.cognitiveservices.vision.customvision.training import CustomVisionTrainingClient

from azure.cognitiveservices.vision.customvision.training.models import ImageFileCreateBatch, ImageFileCreateEntry, Region

from msrest.authentication import ApiKeyCredentials

import time

import json

import os

import pandas as pd

from dotenv import load_dotenv

from pathlib import Path

from PIL import Image, ImageOps

from PIL import UnidentifiedImageError

import matplotlib.pyplot as pltCredentials and services

load_dotenv(DOTENV_PATH)

training_endpoint = os.getenv('TrainingEndpoint')

training_key = os.getenv('TrainingKey')

project_id = os.getenv('ProjectID')

credentials = ApiKeyCredentials(in_headers={"Training-key": training_key})

training_client = CustomVisionTrainingClient(training_endpoint, credentials)

custom_vision_project = training_client.get_project(project_id)Functions

# Borrowed from fastai library

def verify_image(fn):

"Confirm that `fn` can be opened"

try:

im = Image.open(fn)

im.draft(im.mode, (32,32))

im.load()

return True

except: return False

#except PIL.UnidentifiedImageError:The SDK / API allows to upload images in batches but I didn’t find a way to match the local image name with the id generated by the service. Then I opted to create a function that uploads the pictures one by one.

def Upload_Images_1by1(pictures: list[Path]) -> list('dict'):

"""Upload the pictures from a list of paths,

one by one to keep track of the relation between

local image name and Azure image id.

And to track the ones that python fails opening

"""

print("Uploading images...")

processed_ids = []

processed_status = []

picture_names = []

for pic_path in pictures:

if verify_image(pic_path):

with open(pic_path, mode="rb") as image_data:

image_entry = ImageFileCreateEntry(

name=pic_path.name, contents=image_data.read()

)

# Upload the list of (1) images as a batch

upload_result = training_client.create_images_from_files(

custom_vision_project.id,

# Creates an ImageFileCreateBatch from a list of 1 ImageFileCreateEntry

ImageFileCreateBatch(images=[image_entry])

)

# Check for failure

if not upload_result.is_batch_successful:

pic_status = upload_result.images[0].status

pic_id = None

else:

pic_status = upload_result.images[0].status

pic_id = upload_result.images[0].image.id

else:

pic_status = "ReadError" # Equivalente to SDK `ErrorSource`

pic_id = None

processed_status.append(pic_status)

processed_ids.append(pic_id)

picture_names.append(pic_path.name)

print(pic_path.name, "//", pic_id, "//", pic_status)

return {"image_name": picture_names,

"image_id": processed_ids,

"image_status": processed_status}Explore pictures

pics_folder = Path('./train_images')

pictures = sorted(list(pics_folder.iterdir()))

print(f"There are {len(pictures)} pictures")There are 203 picturesfig, axes = plt.subplots(3, 4, figsize=(16, 12))

def show_img(im, figsize=None, ax=None):

if not ax: fig, ax = plt.subplots(figsize=figsize)

ax.imshow(im)

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

return ax

for i, ax in enumerate(axes.flat):

im = Image.open( pictures[i*10] )

ax = show_img(im, ax=ax)

As you can see the pictures are very varied. Different cameras, lighting conditions, focus, resolutions and sizes…

Upload the pictures to Custom Vision Service

uploaded_images_df = pd.DataFrame(columns=["image_name", "image_id", "image_status"])upload_batch = Upload_Images_1by1(pictures)uploaded_images_df = pd.DataFrame(upload_batch)

uploaded_images_df| image_name | image_id | image_status | |

|---|---|---|---|

| 0 | 41.JPG | 452a0b58-0dc5-41ff-83d1-8d1ae7bd5d1c | OK |

| 1 | CIMG0030.JPG | 96b7774e-f5ad-4591-aa71-99ad5c71135e | OK |

| 2 | CIMG0031.JPG | 3027bc7e-6e21-4b13-a7d7-bb7e08ce6824 | OK |

| 3 | CIMG0056.JPG | 1320ab2e-3405-4853-bd7e-b0ef0f915d4b | OK |

| 4 | CIMG0059.JPG | aa67eceb-3db0-4026-bf16-0842c006e6ac | OK |

| ... | ... | ... | ... |

| 198 | torre cerro el pavon 075.jpg | b6dd061a-a68d-4d91-a39f-711968445571 | OK |

| 199 | torre cerro el pavon 080.jpg | d12264cf-3d7b-469c-9445-da8dce8dabef | OK |

| 200 | torre cerro el pavon 085.jpg | c6d587fe-5f3a-46ea-bc04-7ff54f10b4ae | OK |

| 201 | torre cerro el pavon 086.jpg | ea34cbad-8d50-4b5f-aed0-91d7fe40a754 | OK |

| 202 | torre cerro el pavon 087.jpg | 6e274dfc-411a-4bf3-9151-51b96f662248 | OK |

203 rows × 3 columns

print(f"{sum(uploaded_images_df.image_status != 'OK')}

images failed when uploading")0 images failed uploadingSave a csv:

uploaded_images_df.to_csv('20221012_203_Images_Uploaded.csv', index=False)Part 1.3. Explore Data from Custom Vision Service

Get id’s of uploaded images

train_images = training_client.get_images(

project_id=custom_vision_project.id,

take=250,

skip=0

)print(f"There are {len(train_images)} training images in the service.")

print(f"Each image has a type of {type(train_images[0])}.")There are 203 training images in the service.

Each image has a type of <class 'azure.cognitiveservices.vision.customvision.training.models._models_py3.Image'>.Some properties of the image class:

image = train_images[0]

print(f"image.id: {image.id}")

print(f"image.width: {image.width}")

print(f"image.height: {image.height}")image.id: 6e274dfc-411a-4bf3-9151-51b96f662248

image.width: 1188

image.height: 900image.original_image_uri'https://irisprodeutraining.blob.core.windows.net:443/i-f6cb4ba75bbe46a4883669654dc86f3a/o-6e274dfc411a4bf3915151b96f662248?sv=2020-04-08&se=2022-10-16T22%3A23%3A43Z&sr=b&sp=r&sig=ru8DNhvBrpA46oZtmzNP7CRHSkwGugumb3R%2F3IzJaUE%3D'image.resized_image_uri'https://irisprodeutraining.blob.core.windows.net:443/i-f6cb4ba75bbe46a4883669654dc86f3a/i-6e274dfc411a4bf3915151b96f662248?sv=2020-04-08&se=2022-10-16T22%3A23%3A43Z&sr=b&sp=r&sig=U5UQ6tjjdLF5gZHFR6wrrWk8B0w9at4cIUeYyxylx2E%3D'Of course there are no tags yet:

print(f"image.tags: {image.tags}")image.tags: NoneThe images are resized when uploaded

Let’s see the same image locally:

uploaded_images_df[uploaded_images_df.image_id==image.id]| image_name | image_id | image_status | |

|---|---|---|---|

| 202 | torre cerro el pavon 087.jpg | 6e274dfc-411a-4bf3-9151-51b96f662248 | OK |

local_image = uploaded_images_df[

uploaded_images_df.image_id=='6e274dfc-411a-4bf3-9151-51b96f662248'

].image_name.item()

local_image'torre cerro el pavon 087.jpg'im = Image.open(pics_folder / local_image)

im.size(2576, 1952)The local image has a size of (2576, 1952) and was resized to (1188, 900) by the service

Keep track of original size vs. size in the service

To get the real width and height we need to consider EXIF metadata. That’s because local images are sometimes rotated by the viewer with some app viewer.

Size of local images

# The image has some EXIF meta data including information about orientation (rotation)

# https://stackoverflow.com/a/63950647

local_w = []

local_h = []

for image in uploaded_images_df.image_name:

im = Image.open(pics_folder / image)

im = ImageOps.exif_transpose(im)

local_w.append(im.size[0])

local_h.append(im.size[1])local_w[:5], local_h[:5]([640, 1620, 1620, 2160, 2160], [480, 2160, 2160, 1620, 1620])uploaded_images_df['ori_w'] = local_w

uploaded_images_df['ori_h'] = local_h

uploaded_images_df.head(5)| image_name | image_id | image_status | ori_w | ori_h | |

|---|---|---|---|---|---|

| 0 | 41.JPG | 452a0b58-0dc5-41ff-83d1-8d1ae7bd5d1c | OK | 640 | 480 |

| 1 | CIMG0030.JPG | 96b7774e-f5ad-4591-aa71-99ad5c71135e | OK | 1620 | 2160 |

| 2 | CIMG0031.JPG | 3027bc7e-6e21-4b13-a7d7-bb7e08ce6824 | OK | 1620 | 2160 |

| 3 | CIMG0056.JPG | 1320ab2e-3405-4853-bd7e-b0ef0f915d4b | OK | 2160 | 1620 |

| 4 | CIMG0059.JPG | aa67eceb-3db0-4026-bf16-0842c006e6ac | OK | 2160 | 1620 |

Size of images in the service

service_ids = [im.id for im in train_images]

service_w = [im.width for im in train_images]

service_h = [im.height for im in train_images]service_w = {id: w for id, w in zip(service_ids, service_w)}

service_h = {id: h for id, h in zip(service_ids, service_h)}

uploaded_images_df['train_w'] = uploaded_images_df['image_id'].map(service_w)

uploaded_images_df['train_h'] = uploaded_images_df['image_id'].map(service_h)Checking consistency in the ratios

ori_ratio = uploaded_images_df.ori_w / uploaded_images_df.ori_h

train_ratio = uploaded_images_df.train_w / uploaded_images_df.train_h

all(abs(ori_ratio - i_ratio) > .3)FalseImages that has an inconsistent ratio:

uploaded_images_df[abs(ori_ratio - train_ratio) > 0.1]| image_name | image_id | image_status | ori_w | ori_h | train_w | train_h | |

|---|---|---|---|---|---|---|---|

| 179 | TORRE EL TIGRITO 01.jpg | 2563fffe-d621-4799-8e81-6ad57049cdaa | OK | 480 | 640 | 640 | 480 |

im = Image.open( pics_folder / 'TORRE EL TIGRITO 01.jpg' )

show_img(im);

im.size(640, 480)ImageOps.exif_transpose failed for this image.

But if you don’t use it, more images would be inconsistent.

If seems that exif_transpose keep track of manually rotated images.

im = ImageOps.exif_transpose(im)

im.size(480, 640)filter = uploaded_images_df.image_id == '2563fffe-d621-4799-8e81-6ad57049cdaa'

uploaded_images_df.loc[filter, ['ori_w', 'ori_h']] = (640, 480)

uploaded_images_df[filter]| image_name | image_id | image_status | ori_w | ori_h | train_w | train_h | |

|---|---|---|---|---|---|---|---|

| 179 | TORRE EL TIGRITO 01.jpg | 2563fffe-d621-4799-8e81-6ad57049cdaa | OK | 640 | 480 | 640 | 480 |

Exporting csv with size data

uploaded_images_df.sample(10)| image_name | image_id | image_status | ori_w | ori_h | train_w | train_h | |

|---|---|---|---|---|---|---|---|

| 155 | P1100700.JPG | b10efb57-70a4-48d6-a846-121ded4546f8 | OK | 2048 | 1360 | 1355 | 900 |

| 7 | CUMACA 11.jpg | 2c55467b-5de5-4329-91d2-a2fafdedd080 | OK | 2592 | 1944 | 1200 | 900 |

| 49 | IMG_1170.JPG | ce2177ae-d03e-4a61-9dfb-4229542572fe | OK | 480 | 640 | 480 | 640 |

| 141 | MVC-024S.JPG | 9ba84daa-e00c-4975-a07b-3ae23ef8f884 | OK | 640 | 480 | 640 | 480 |

| 136 | Imagen008.jpg | c861b4de-127a-4dc0-84ea-9cb96fb380f2 | OK | 640 | 480 | 640 | 480 |

| 202 | torre cerro el pavon 087.jpg | 6e274dfc-411a-4bf3-9151-51b96f662248 | OK | 2576 | 1952 | 1188 | 900 |

| 145 | P1100611.JPG | 1148d437-fc44-4c51-af4a-4751e242b3b7 | OK | 2048 | 1360 | 1355 | 900 |

| 147 | P1100613.JPG | c9dab11e-0663-42f8-8c93-4e2351b15d4c | OK | 2048 | 1360 | 1355 | 900 |

| 171 | PICT0386.JPG | 0e51a561-b938-48f6-8bc6-3c3bf4c72c44 | OK | 2560 | 1920 | 1200 | 900 |

| 142 | MVC-025S.JPG | a8c2a746-2a65-4872-b7b5-0bd5edf965c9 | OK | 640 | 480 | 640 | 480 |

uploaded_images_df.to_csv('20221015_203_Images_Uploaded_WxH.csv', index=False)Conslusions

- It was straightforward to upload images to the service.

- Big images got resized, but their ratios were kept.

exif_transposeneeds to be used to get the real width and height of the image, which may be different to the original size. For example when the image is rotated manually when looking at it. But somehow it failed with one of the images.